Otter Assistant for Teams, hands on: Flexible transcription for your remote meetings

Image: Otter.ai

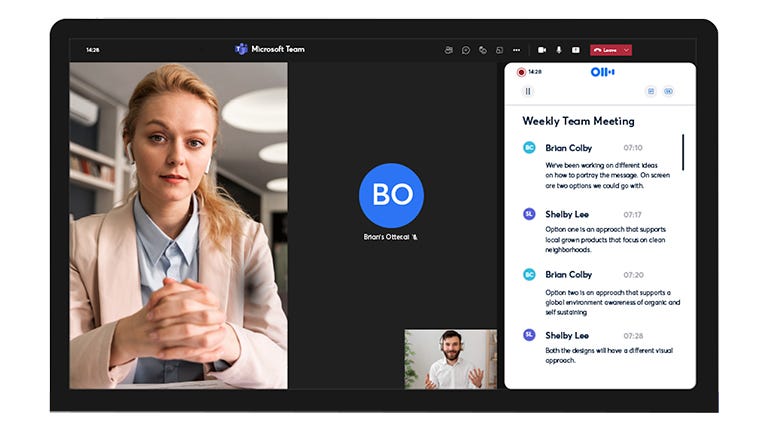

Microsoft Teams uses the speech-to-text transcription feature that’s also available through Azure Cognitive Services to offer live captions or transcriptions of meeting recordings. However, it has to be a meeting scheduled through Outlook and the transcription has to be started manually from the desktop version of Teams.

Transcription has to be turned on by the organiser or by someone else in the meeting who’s in the same tenant. So if you’re invited to a Teams meeting with a partner, supplier, customer or anyone else outside your own organisation, you can see live captions if someone else turns them on, but you can’t turn on transcription yourself and you can’t see the transcript after the meeting. That’s probably because the transcript is stored in the meeting organiser’s Exchange Online account, where the organiser can delete it.

Given those restrictions, a third-party transcription tool like Otter may actually be more useful. Rather than just running the Otter app on your phone (or in your web browser), if you have an Otter business account — which costs $20 per user per month — you can use the Otter Assistant to transcribe Teams meetings (or meetings in Zoom, Google Meet and Webex).

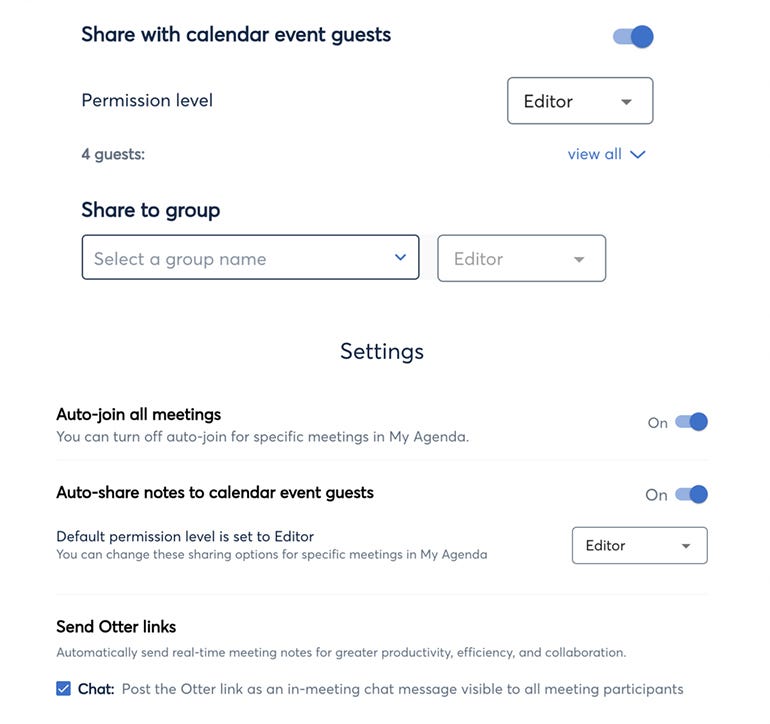

You can choose whether to share the Otter Assistant transcript in all meetings or just specific meetings.

Image: Mary Branscombe / ZDNet

Unlike many Teams integrations, this isn’t an app you install in Teams; instead, you set it up in your Otter account by connecting to your calendar (Outlook, Google or Zoom). Once Otter has synced your list of meetings, you can either have the Otter Assistant automatically join all your Teams meetings or choose the specific meetings you want to turn it on for. You can also set whether Otter should automatically post a link to the transcript in the meeting chat or send it to all attendees afterwards (which may not always be appropriate). If you choose to share transcriptions, you can set who can edit the transcript (to fix any mistakes) or add comments, and who can only view it (which includes adding highlights and exporting the text or audio).

You’ll probably want to warn people that you’re using the Otter Assistant — not only because it’s polite to let people know they’re being recorded, but also because it will show up as another attendee and will join at the official start time. So if you’re a minute late, someone may find themselves trying to talk to Mary’s Otter AI and being confused that it doesn’t reply.

If it’s a meeting where attendees have to wait in the lobby until they’re admitted, the transcript won’t start until the Otter Assistant has been let in. You’ll get an email to warn you if it hasn’t been admitted, with a button to click to re-invite the bot if it is no longer visible in the lobby.

Unlike the transcription built into Teams, Otter Assistant isn’t restricted to scheduled meetings; if you jump into a quick meeting to sort something out, you can still use the assistant. Again, you’ll have to do it from the Otter dashboard, and you need the link from the Teams invite, which includes any meeting password.

That won’t always work for conference events hosted in Teams: for example, the Teams-based Q&A sessions at the recent Microsoft Ignite conference were set to open in the web version and didn’t expose the meeting details in the URL (or appear in our Teams calendar), so we couldn’t even try to invite the Otter Assistant.

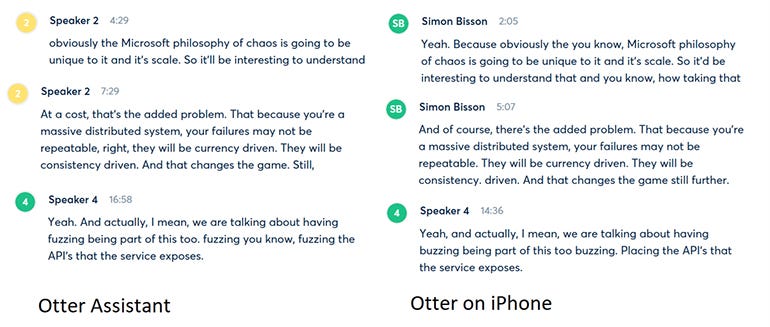

Theoretically, recording the audio stream directly should give better quality than using a device speaker and microphone. In practice, this mostly produced a more accurate transcription: for example, correctly getting ‘8 AM’ as a time rather than the ‘AM AM’ we saw in the transcription of the same meeting done with Otter on an iPhone. But in some cases, the Otter Assistant missed a couple of words at the beginning of sentences that the phone transcript captured.

Otter Assistant for Teams didn’t initially recognise speakers as well as the iPhone Otter app we’d used for some time; the apps also made different mistakes in transcription.

Image: Mary Branscombe / ZDNet

Because we’d used the iPhone to transcribe previous conversations, speaker attribution was better there at first; Otter learns to recognise specific voices and remembers the names you give them, but that will be affected by the microphone and speaker combination on your devices. The Otter Assistant did recognise some voices straight away, and its recognition improved quickly as we used it for more transcriptions inside Teams. But it didn’t always detect the transition from one speaker to another as well as the phone app, or catch everything at the beginning or end of what someone said. Both versions of Otter get some words wrong (‘currency’ instead of ‘concurrency’); Otter Assistant correctly recognised ‘fuzzing’, which the phone app mistook for ‘buzzing’ and ‘placing’, but the phone app correctly recognised ‘of course’, where Otter Assistant suggested ‘at a cost’.

Transcription isn’t perfect, and you’ll probably still want to take outline notes with your own action items and any key decisions, because what you get in a transcript is a flood of text including every digression and back-and-forth discussion. But if you’re already using Otter to keep track of all the useful information in meetings, you can save yourself all the fumbling with your phone at the beginning of a meeting and concentrate on having your conversation.
